Local AI Models in Browsers (Chrome Canary)

Browsers and operating systems are increasingly expected to have access to language models. This could really boost productivity, making tasks like translation and summarisation a lot easier. As you probably know, Apple has been bringing AI models into its devices with Apple Intelligence.

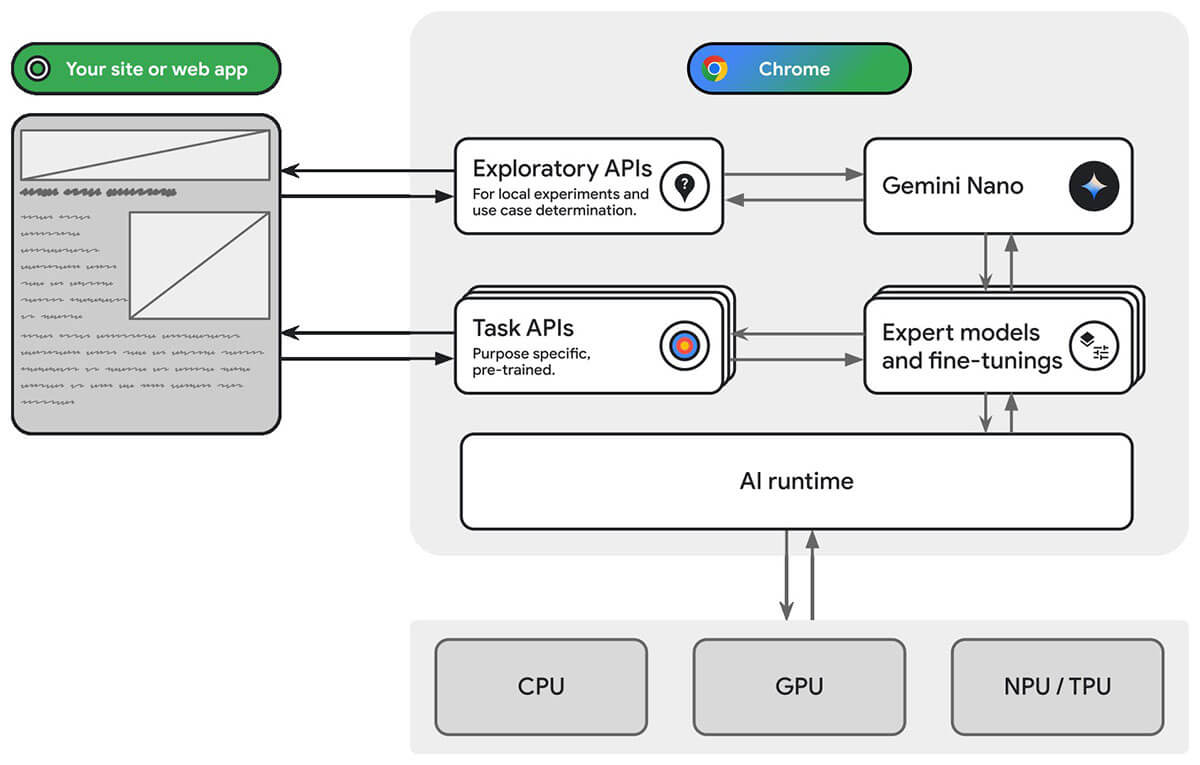

Google, on the other hand, is adding a local LLM (Gemini Nano) directly into its web browser. It's quite experimental at the moment (it sits behind flags that you have to enable), but it's a big step forward. For us developers, this means we can build better front-end experiences with very low latency, because results come from the on-device LLM instead of a network round trip. For instance, you could type in English and get real-time translations into other languages. With language models built into browsers, there's no need to rely on external API calls to OpenAI, Google Gemini, or others. As browsers start to ship local LLMs natively, a whole new world opens up for web developers.

Of course, remote LLMs still let us fine-tune models and do advanced things like RAG (retrieval-augmented generation). Right now, the local version of Gemini that comes with Chrome Canary/Dev is fairly limited: it has a smaller context window and a lower token limit. In the future, we might be able to do those advanced RAG-like tasks locally as well.

You might be wondering why we even need this on-device AI and why it’s so important. The main reason is privacy. When handling sensitive data, we don’t want to expose that information to remote LLMs. This is especially crucial for industries like healthcare, where keeping patient data secure is a top priority. With on-device LLMs, we can ensure that data never leaves the device. This is a big win for privacy and security.

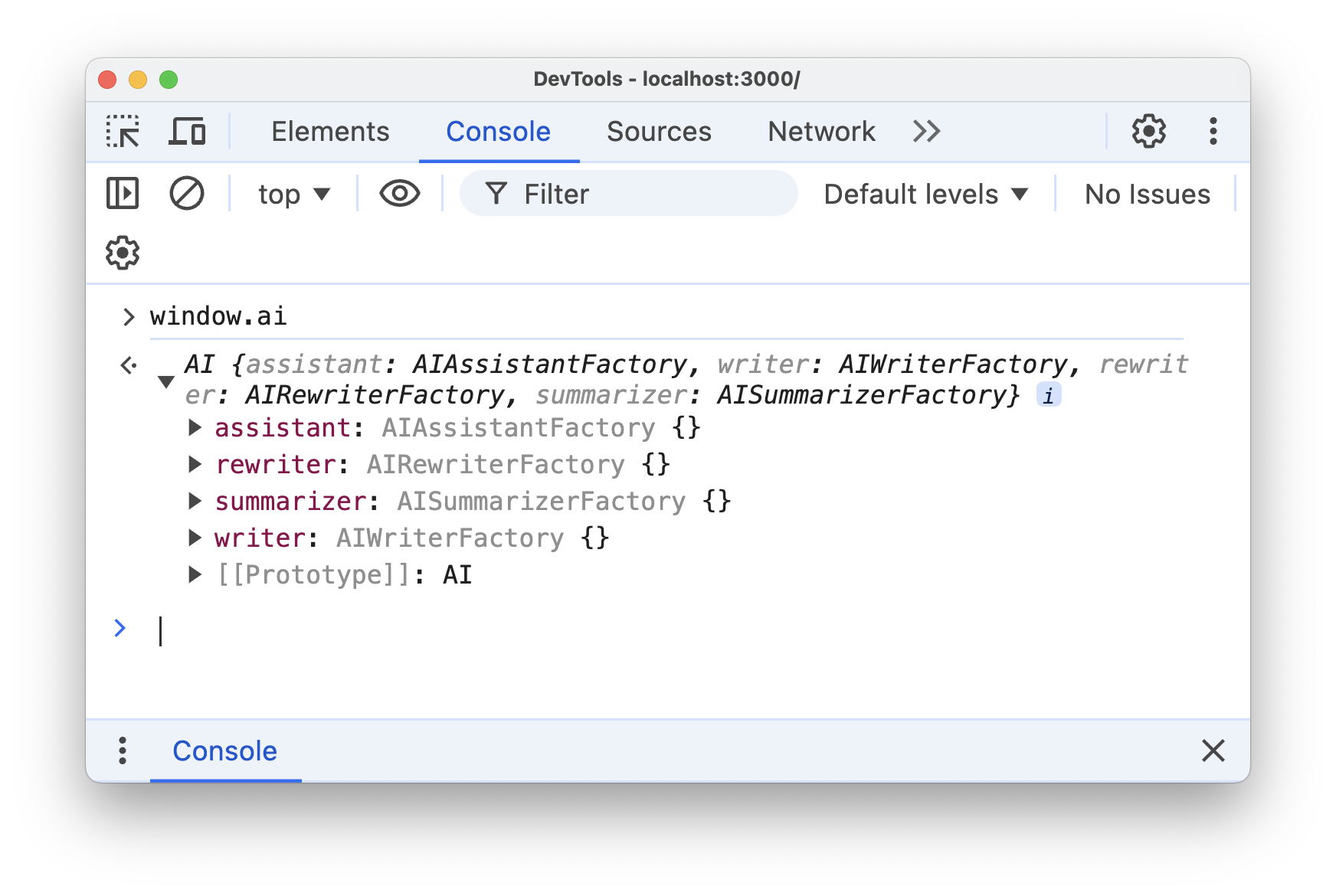

This experimental feature exposes a set of utility methods under the `window.ai` namespace for interacting with the local LLM (Gemini Nano):

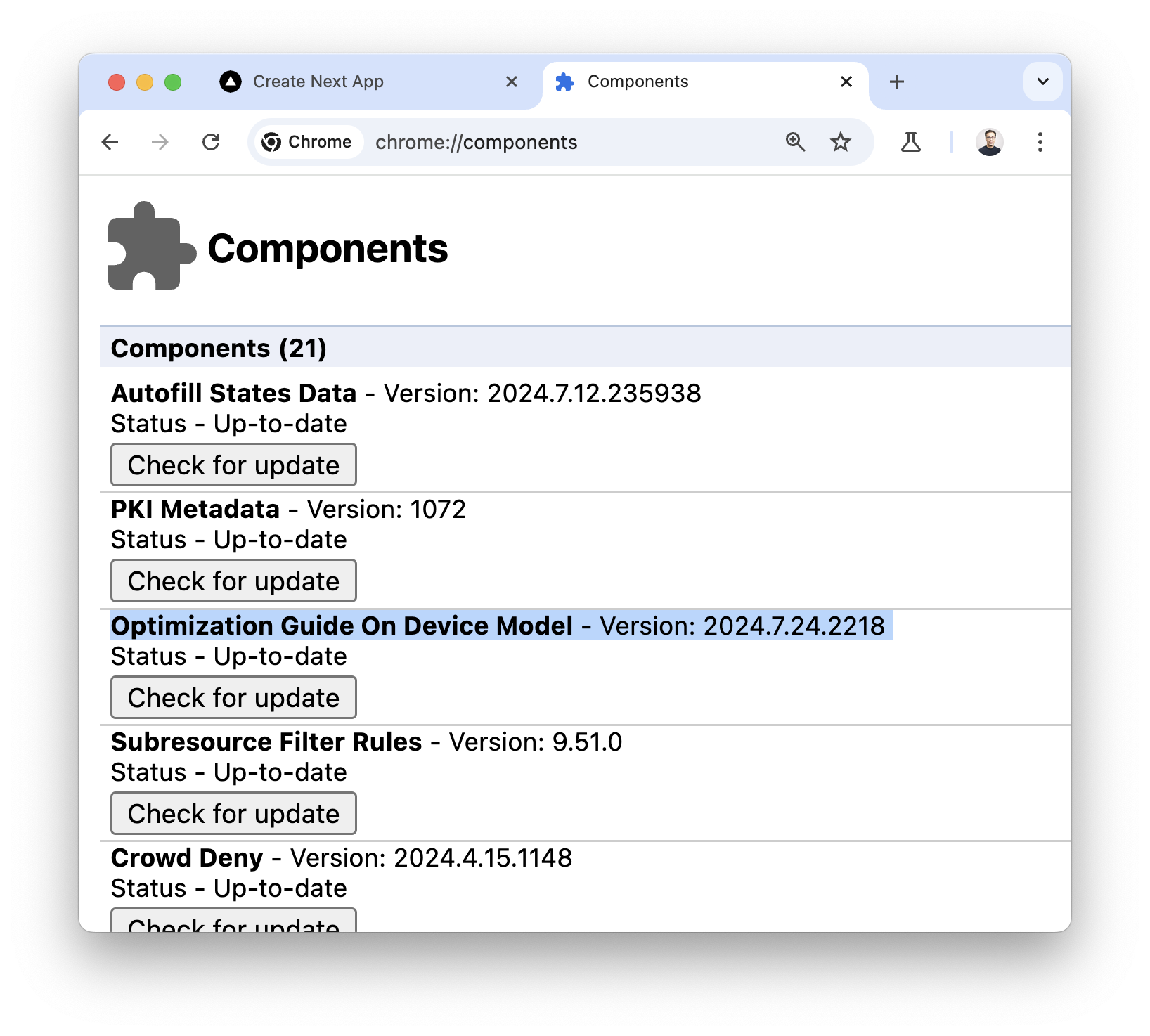
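Because these APIs sit behind flags and only exist in some builds, it helps to check for them before calling them. Below is a minimal feature-check sketch; it assumes only that the APIs hang off `window.ai` as `writer` and `summarizer`, as in the code later in this post, and the helper names `detectLocalAI` and `isObject` are hypothetical.

```typescript
// Hypothetical feature check for the experimental on-device APIs.
// Assumption: they are exposed as `ai.writer` and `ai.summarizer` on the
// global object, as in this post; the shape may change between Chrome builds.
interface LocalAISupport {
  hasAI: boolean;
  hasWriter: boolean;
  hasSummarizer: boolean;
}

// Narrows an unknown value to a non-null object so its properties can be read.
function isObject(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null;
}

// Takes the global object as a parameter so the check also runs outside a browser.
function detectLocalAI(globalObj: unknown): LocalAISupport {
  const ai = isObject(globalObj) ? globalObj.ai : undefined;
  return {
    hasAI: isObject(ai),
    hasWriter: isObject(ai) && isObject(ai.writer),
    hasSummarizer: isObject(ai) && isObject(ai.summarizer),
  };
}
```

In the browser you would call `detectLocalAI(globalThis)` and hide the AI buttons when `hasWriter` or `hasSummarizer` is false. Passing the global object in as a parameter keeps the check testable outside Chrome.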

Working with the local LLM is a bit tricky, as it only works on Chrome Canary or Dev. You must enable some flags:

- chrome://flags/#optimization-guide-on-device-model (set to "Enabled BypassPerfRequirement")
- chrome://flags/#prompt-api-for-gemini-nano (set to "Enabled")

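Then install the model: open chrome://components and click "Check for update" on the "Optimization Guide On Device Model" component.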

The project I am working on is a simple e-shop that uses the LLM to generate product reviews and summarise them. Here's a quick demo:

- Writer API:

- Summarisation API:

Both APIs are quite simple to use:

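```tsx
// Assumes `product`, `newReview` and `setNewReview` come from the enclosing
// React component (the product data and the review form state).
const handleGenerateReview = async () => {
  try {
    const writer = await window.ai.writer.create();
    const stream = await writer.writeStreaming(
      `Based on the following product details:
Title: ${product.title}
Description: ${product.description}
I have rated it ${newReview.rating} stars out of 5.

Please write a review reflecting this rating. Make sure to:
- Mention the rating number explicitly in the review.
- Tailor the tone to the rating given.
- You must include a relevant emoji to match the overall sentiment.

Rating Guide:
1 star: Terrible 😡
2 stars: Bad 😞
3 stars: Average 😐
4 stars: Good 🙂
5 stars: Excellent 😍
`
    );

    for await (const chunk of stream) {
      // Replace the content with each chunk. This is what the earlier
      // reset-then-append pair of updates amounted to, and it assumes each
      // chunk holds the full text generated so far.
      setNewReview((prev) => ({ ...prev, content: chunk }));
    }
  } catch (error) {
    // Don't swallow failures silently (e.g. the model isn't downloaded yet).
    console.error("Review generation failed:", error);
  }
};
```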
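The review handler treats every streamed chunk as the full text generated so far. Whether chunks are cumulative or contain only the newly generated piece may differ between experimental Chrome builds, so it is worth checking on yours. The hypothetical helpers below (`mergeChunk`, `collectStream`, `StreamMode`) make that choice explicit instead of baking it into the state update; they assume only that the stream is async-iterable over strings, which the `for await` loop in the handler already relies on.

```typescript
// Hypothetical helpers for consuming a streamed model response.
// Assumption: the stream yields strings and is async-iterable, as the
// `for await` loop in the review handler already relies on.
type StreamMode = "cumulative" | "delta";

// Combines the text received so far with the next chunk.
function mergeChunk(previous: string, chunk: string, mode: StreamMode): string {
  // Cumulative chunks already contain everything; deltas must be appended.
  return mode === "cumulative" ? chunk : previous + chunk;
}

// Reads the whole stream, reporting the merged text after every chunk.
async function collectStream(
  stream: AsyncIterable<string>,
  mode: StreamMode,
  onUpdate: (text: string) => void,
): Promise<string> {
  let text = "";
  for await (const chunk of stream) {
    text = mergeChunk(text, chunk, mode);
    onUpdate(text);
  }
  return text;
}
```

Inside the handler, the loop could then become `await collectStream(stream, "cumulative", (text) => setNewReview((prev) => ({ ...prev, content: text })));`, and switching to `"delta"` is a one-word change if your build streams only the new pieces.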

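```tsx
import { useState } from "react";
// Assumed import path for the shadcn/ui Dialog used here; adjust to your project.
import { Dialog } from "@/components/ui/dialog";

// Props inferred from how the component uses them.
type ShowSummaryProps = {
  context: string; // the product's reviews, assumed to be passed as text
  isModalOpen: boolean;
  setIsModalOpen: (open: boolean) => void;
};

export const ShowSummary = (props: ShowSummaryProps) => {
  const [summary, setSummary] = useState<string>("");
  const [loading, setLoading] = useState<boolean>(false);

  const generateSummary = async () => {
    try {
      setLoading(true);
      const summarizer = await window.ai.summarizer.create();
      const result = await summarizer.summarize(`
Here is a list of customer reviews for the product:
Reviews: ${props.context}

Please:
- Summarise the key points and sentiments from these reviews.
- Provide a clear takeaway on whether the product is worth buying or not, based on the overall consensus.
- Highlight any recurring themes, positives, or concerns that are mentioned frequently.
- Keep the result super concise. The result must be in markdown table format, with an hr before the table.
`);
      setSummary(result);
    } catch (error) {
      console.error("Summarisation failed:", error);
    } finally {
      // Always clear the loading state, even if summarisation fails.
      setLoading(false);
    }
  };

  return (
    <Dialog
      open={props.isModalOpen}
      onOpenChange={(open) => {
        props.setIsModalOpen(open);
        // Only summarise when the dialog opens, not when it closes.
        if (open) generateSummary();
      }}
    >
      ...
    </Dialog>
  );
};
```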
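The summary component expects all the reviews as one piece of text in `props.context`. Given the on-device model's smaller context window, it can be worth capping how much text you send. Here is a minimal sketch, assuming reviews carry the `rating` and `content` fields used by the review form; the `Review` type, `buildReviewContext`, and the 4000-character default are hypothetical, and characters are only a rough proxy for tokens.

```typescript
// Hypothetical review shape, mirroring the `rating` and `content` fields
// used by the review form in this post.
interface Review {
  rating: number;
  content: string;
}

// Joins reviews into one text block for the summarizer prompt, stopping
// before the output would exceed `maxChars` (a rough stand-in for the
// on-device model's limited context window).
function buildReviewContext(reviews: Review[], maxChars = 4000): string {
  const lines: string[] = [];
  let used = 0;
  let index = 0;
  for (const review of reviews) {
    index += 1;
    const line = `Review ${index} (${review.rating}/5): ${review.content.trim()}`;
    // Every line after the first also costs one newline separator.
    const cost = line.length + (lines.length > 0 ? 1 : 0);
    if (used + cost > maxChars) break;
    lines.push(line);
    used += cost;
  }
  return lines.join("\n");
}
```

The result could then be passed as `context={buildReviewContext(reviews)}`, where `reviews` is whatever list of reviews your page already holds.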

Personally, I think this is huge. It makes front-end development easier and gives users a faster, smoother experience.